Welcome to the first tutorial about DirectX10. For those who are new to this area of programming: DirectX is an API, a set of libraries provided by Microsoft, with which it is possible to build games and graphics applications for the PC and also for Microsoft consoles (the Xbox 360, for example).

Together with OpenGL (an open, cross-platform standard, not an open source library), it is used by the great majority of graphics applications for PC and consoles.

DirectX offers components and functions for managing 2D and 3D graphics, music and sound, input from keyboard, mouse and joystick, and also some networking features (to help us build multiplayer games). Over the years DirectX has evolved alongside the hardware, and in particular alongside video cards, which have evolved together with DirectX.

The new version (D3D10) is, without doubt, the one that makes the sharpest break with the past, removing everything that was considered deprecated in older versions.

Those coming from older versions will immediately notice many differences, such as the absence of the Lost Device state (resource management is now fully automatic) and of the Caps (in DirectX9, a structure describing which features a video card supported; they have disappeared because every DirectX10-compatible video card must support the entire feature set).

Another important change, and the reason I am not starting this tutorial series with the famous “Hello World”, is the removal of the fixed pipeline.

Now let's look at how DirectX evolved from DirectX7 to DirectX10.

DirectX7 used the fixed pipeline to process 3D objects. The fixed pipeline is a set of algorithms built into DirectX that, starting from a description of the scene, generates the final image on screen. The programmer could only set its main parameters, such as the number of lights, alpha blending and object positions. Anything the fixed pipeline did not provide had to be done on the CPU, so many effects were simply not feasible.

(Half-Life, developed with DirectX7 by Valve in 1998)

DirectX8 introduced shaders. A shader is a small program, run by the video card, that replaces the fixed pipeline in processing 3D models. There are two types:

- Vertex shader, which processes the vertices of a 3D object

- Pixel shader, which processes the pixels covered by a 3D object

Programmers could now create new effects with shaders, because all of this ran on the GPU. The quality of games improved greatly, even though DirectX8 shaders still had significant limits.
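To make the idea of the two shader types concrete, here is a minimal CPU-side analogy, not DirectX code: a "vertex shader" is a user function called once per vertex, and a "pixel shader" is a user function called once per pixel. The names `Vec3`, `Color`, `runVertexStage` and `runPixelStage` are hypothetical; real shaders run on the GPU and are not written with `std::function`.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical CPU analogy of the two DirectX8 shader stages.
// Real shaders run on the GPU; this only mimics "one user function per
// vertex" and "one user function per pixel".
struct Vec3 { float x, y, z; };
struct Color { float r, g, b, a; };

// "Vertex shader": called once for every vertex of the mesh.
using VertexShader = std::function<Vec3(const Vec3&)>;
// "Pixel shader": called once for every pixel to be colored.
using PixelShader = std::function<Color(int x, int y)>;

// Applies the vertex function to every vertex, in order.
std::vector<Vec3> runVertexStage(const std::vector<Vec3>& vertices,
                                 const VertexShader& shader) {
    std::vector<Vec3> out;
    out.reserve(vertices.size());
    for (const Vec3& v : vertices) out.push_back(shader(v));
    return out;
}

// Applies the pixel function to every pixel of a width x height image,
// row by row.
std::vector<Color> runPixelStage(int width, int height,
                                 const PixelShader& shader) {
    assert(width >= 0 && height >= 0);
    std::vector<Color> image;
    image.reserve(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) image.push_back(shader(x, y));
    return image;
}
```

For example, passing a lambda that adds 2 to `x` moves a whole triangle, and a lambda returning a color based on `x` produces a horizontal gradient. The point of the design is that the loop belongs to the "pipeline" while the per-element logic is supplied by the programmer.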

(Max Payne, developed with DirectX8 by Remedy Entertainment in 2001)

With DirectX9, shaders reached maturity. HLSL was born, a C-like language that simplifies writing shaders, and most of the earlier limits were removed.

(Unreal Tournament 3, developed with DirectX9 by Epic, due out for PC in 2007)

DirectX10 adds further features and a new shader type: the geometry shader. This shader lets us do something new: add or remove primitives using the video card's hardware. Together with the other new features, this lets us drastically reduce CPU usage and reach graphics quality close to photorealism.
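What "adding or removing primitives" means can be sketched with another CPU-side analogy, not real DirectX10 code: a geometry stage receives one whole triangle at a time and may emit zero, one or several triangles. The rule used here (drop zero-area triangles, split every other triangle in two at the midpoint of one edge) is an arbitrary example chosen for illustration, and `geometryStage` and `doubleArea` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical CPU analogy of a geometry shader: it sees a whole
// primitive (a triangle) and can emit zero or more primitives.
struct Vec3 { float x, y, z; };
struct Triangle { Vec3 a, b, c; };

// Twice the triangle's area: length of the cross product of two edges.
float doubleArea(const Triangle& t) {
    float ux = t.b.x - t.a.x, uy = t.b.y - t.a.y, uz = t.b.z - t.a.z;
    float vx = t.c.x - t.a.x, vy = t.c.y - t.a.y, vz = t.c.z - t.a.z;
    float cx = uy * vz - uz * vy;
    float cy = uz * vx - ux * vz;
    float cz = ux * vy - uy * vx;
    return std::sqrt(cx * cx + cy * cy + cz * cz);
}

// Removes degenerate triangles and splits each remaining one in two
// at the midpoint of edge a-b (an illustrative rule only).
std::vector<Triangle> geometryStage(const std::vector<Triangle>& input) {
    std::vector<Triangle> output;
    for (const Triangle& t : input) {
        if (doubleArea(t) < 1e-6f) continue;  // remove: emit nothing
        Vec3 m{(t.a.x + t.b.x) / 2, (t.a.y + t.b.y) / 2, (t.a.z + t.b.z) / 2};
        output.push_back({t.a, m, t.c});       // add: emit two triangles
        output.push_back({m, t.b, t.c});
    }
    assert(output.size() <= 2 * input.size());
    return output;
}
```

With one normal triangle and one whose vertices lie on a line, the output contains exactly two triangles: the degenerate one disappears and the other one is doubled. On DirectX10 hardware this kind of work happens on the video card instead of the CPU.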

(The graphics engine CryEngine 2, currently in development at Crytek, uses DirectX10)

We will look at all the other improvements later on.

Introduction to real-time applications

A real-time application is software that generates images in sequence quickly enough to fool our eyes, giving us the impression that they are moving. A speed of 16 fps (frames per second) is enough for our purposes, but raising it gives smoother motion (games usually run at 30-60 fps). There are two kinds of graphics: 2D and 3D. 2D graphics is based on moving blocks of memory: you simply load an image and copy it into a memory area that will be shown on the screen. 3D graphics works differently.
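The 2D approach, copying an image into the memory that ends up on screen, can be sketched as a row-by-row memory copy (often called a "blit"). This is a minimal sketch under stated assumptions: 32-bit pixels, images stored row by row, and a plain `std::vector` standing in for the screen's memory. `Image` and `blit` are hypothetical names, not DirectX APIs.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical image type: width * height 32-bit pixels, row-major.
struct Image {
    int width = 0, height = 0;
    std::vector<std::uint32_t> pixels;
};

// Copies `sprite` into `screen` with its top-left corner at (dstX, dstY).
// Assumes the sprite fits entirely inside the screen (no clipping).
void blit(const Image& sprite, Image& screen, int dstX, int dstY) {
    assert(sprite.pixels.size() == static_cast<std::size_t>(sprite.width) * sprite.height);
    assert(dstX >= 0 && dstY >= 0);
    assert(dstX + sprite.width <= screen.width);
    assert(dstY + sprite.height <= screen.height);
    if (sprite.width == 0 || sprite.height == 0) return;  // nothing to copy
    for (int row = 0; row < sprite.height; ++row) {
        const std::uint32_t* src = sprite.pixels.data() + row * sprite.width;
        std::uint32_t* dst = screen.pixels.data() + (dstY + row) * screen.width + dstX;
        // Each row is contiguous in memory, so it is copied in one call.
        std::memcpy(dst, src, sprite.width * sizeof(std::uint32_t));
    }
}
```

Drawing a 2x2 sprite at (1, 1) on a 4x3 screen changes only the four screen pixels it covers. Animation in 2D is then just repeating such copies at a new position every frame.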

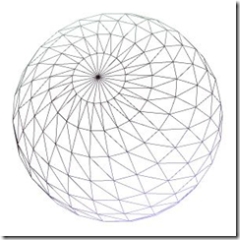

A 3D object (also called a mesh) is a set of triangles arranged in space. An object may look smoothly rounded, but if we get close enough we will see that it is always made of triangles. A cube, for example, has 6 square faces, and each one is built from at least 2 triangles, so 12 triangles are enough to generate our cube. Each triangle is made of 3 vertices.
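Splitting each square face into two triangles is what turns the cube's 6 faces into 12 triangles. The sketch below assumes each face is given by the indices of its 4 corners, listed in order around the face, and cuts it along one diagonal. The corner numbering (0-3 on the front face, 4-7 the matching corners on the back) and the names `triangulate` and `kCubeFaces` are hypothetical choices for illustration.

```cpp
#include <array>
#include <cassert>
#include <vector>

using Quad = std::array<int, 4>;  // 4 corner indices, in order around the face
using Tri = std::array<int, 3>;   // 3 corner indices

// Splits every square face into 2 triangles sharing the diagonal q[0]-q[2].
std::vector<Tri> triangulate(const std::vector<Quad>& faces) {
    std::vector<Tri> tris;
    for (const Quad& q : faces) {
        tris.push_back({q[0], q[1], q[2]});  // first half of the square
        tris.push_back({q[0], q[2], q[3]});  // second half
    }
    assert(tris.size() == 2 * faces.size());
    return tris;
}

// The 6 faces of a cube whose 8 corners are numbered 0..7
// (0-3 on the front face, 4-7 the matching corners on the back face).
const std::vector<Quad> kCubeFaces = {
    {0, 1, 2, 3}, {5, 4, 7, 6},  // front, back
    {4, 0, 3, 7}, {1, 5, 6, 2},  // left, right
    {4, 5, 1, 0}, {3, 2, 6, 7},  // top, bottom
};
```

`triangulate(kCubeFaces)` returns 12 triangles, that is 12 * 3 = 36 corner references, even though the cube has only 8 distinct corners.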

The graphics library takes this information, processes it and sends the result to the screen.

This process is called rendering. 3D objects are stored in special structures:

- Vertex Buffer contains the points (vertices) that make up the 3D model. Besides its position, each vertex can carry other numeric values, such as a color or texture coordinates, that can be used in various ways.

- Index Buffer contains the order in which the vertices are taken to form the triangles of the 3D model. This is useful because many vertices are shared by several triangles, and this system avoids storing them more than once. Take a cube, for example. With 12 triangles the mesh would need 36 vertices which, using 3 floats (12 bytes) for the XYZ coordinates, weigh 12 bytes x 36 vertices = 432 bytes, but only 8 of those vertices are unique (8 x 12 = 96 bytes). Adding an array of 36 shorts (2 bytes each) that gives their order, we use only 36 * sizeof(short) + 8 * 12 = 72 + 96 = 168 bytes instead of 432.
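The cube example can be checked in code. In this sketch, plain `std::vector`s stand in for the real DirectX10 vertex and index buffers, and `std::uint16_t` plays the role of the 16-bit "short" index from the text. The coordinates, the corner numbering and the function names are hypothetical choices for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vertex { float x, y, z; };  // 3 floats = 12 bytes

// Vertex buffer: the 8 unique corners of a cube.
const std::vector<Vertex> kCubeVertices = {
    {-1,  1, -1}, { 1,  1, -1}, { 1, -1, -1}, {-1, -1, -1},  // 0-3: front
    {-1,  1,  1}, { 1,  1,  1}, { 1, -1,  1}, {-1, -1,  1},  // 4-7: back
};

// Index buffer: 12 triangles * 3 indices = 36 entries into kCubeVertices.
const std::vector<std::uint16_t> kCubeIndices = {
    0, 1, 2,  0, 2, 3,   // front
    5, 4, 7,  5, 7, 6,   // back
    4, 0, 3,  4, 3, 7,   // left
    1, 5, 6,  1, 6, 2,   // right
    4, 5, 1,  4, 1, 0,   // top
    3, 2, 6,  3, 6, 7,   // bottom
};

// Bytes needed if every triangle stored its own 3 vertices (36 vertices).
std::size_t nonIndexedBytes() { return kCubeIndices.size() * sizeof(Vertex); }

// Bytes needed for the 8 unique vertices plus the 36 indices.
std::size_t indexedBytes() {
    return kCubeVertices.size() * sizeof(Vertex) +
           kCubeIndices.size() * sizeof(std::uint16_t);
}

// Rebuilds the full 36-vertex triangle list by following the indices.
std::vector<Vertex> expand() {
    std::vector<Vertex> out;
    for (std::uint16_t i : kCubeIndices) {
        assert(std::size_t{i} < kCubeVertices.size());
        out.push_back(kCubeVertices[i]);
    }
    return out;
}
```

With 12-byte vertices, `nonIndexedBytes()` gives 432 and `indexedBytes()` gives 168, matching the figures above. The saving grows with the mesh: in a smooth model each vertex is typically shared by several triangles.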

Meshes are usually created with editors and loaded from file (although nothing stops us from creating them in code, by filling a vertex buffer ourselves). When loaded, a mesh is in the position given by the editor, but rendering places it in the right spot in the scene and shows it on our screen.
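Placing a loaded mesh in the scene can be sketched as offsetting each vertex by the object's position, while the loaded data itself stays unchanged. This is a simplification assumed for illustration: real engines normally use a 4x4 world matrix that also handles rotation and scaling, and on DirectX10 this transformation is typically done in the vertex shader. `Vec3` and `toWorld` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

struct Vec3 { float x, y, z; };

// Returns the mesh's vertices moved to `position` in the world.
// Only a translation is applied (an assumption; no rotation or scale).
std::vector<Vec3> toWorld(const std::vector<Vec3>& modelVertices,
                          const Vec3& position) {
    std::vector<Vec3> world;
    world.reserve(modelVertices.size());
    for (const Vec3& v : modelVertices)
        world.push_back({v.x + position.x, v.y + position.y, v.z + position.z});
    assert(world.size() == modelVertices.size());
    return world;
}
```

Because the function returns a new list, the same loaded mesh can be drawn several times at different positions, for example many copies of one tree.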

Special thanks go to Vincent, who translated this lesson.